Exploring Nvidia CUDA Compatibility and Issues

chipa@dtu.dk

Table of Contents

- Introduction

- Understanding Nvidia CUDA Compatibility

- Applications of Nvidia CUDA Compatibility

- Issues with CUDA Version

- Installation in Ubuntu

- Conclusion

Introduction

In today's digital landscape, where data processing and computational capacity are crucial for a wide range of applications, it is essential to make full use of hardware acceleration. Nvidia CUDA (Compute Unified Device Architecture) is a game-changing technology that unleashes the power of Nvidia GPUs (Graphics Processing Units) through massively parallel processing. In this article, I will explore the significance, benefits, and practicality of CUDA compatibility. In addition, I will explain how to set up CUDA on Linux.

Understanding Nvidia CUDA Compatibility

CUDA compatibility means that a program or piece of software can run on Nvidia GPUs and use their parallel processing abilities. By using the huge processing power of GPUs, CUDA allows developers to speed up their algorithms and computations, which is a big improvement over traditional CPU-based processing.

Nvidia CUDA compatibility is not limited to a specific programming language. It provides developers with powerful APIs (Application Programming Interfaces) and libraries that allow them to seamlessly integrate CUDA functionality into their existing codebase. The CUDA programming model supports popular languages such as C, C++, and Fortran, making it accessible to a broad community of developers.

Applications of Nvidia CUDA Compatibility

- Scientific and Technical Computing: CUDA compatibility is extensively used in scientific simulations, weather modeling, fluid dynamics, and molecular dynamics, among others. These fields require intensive computations, and leveraging GPUs through CUDA can significantly accelerate the processing of complex algorithms and simulations.

- Machine Learning and Artificial Intelligence: The field of machine learning heavily relies on the efficient processing of massive datasets and complex neural networks. CUDA compatibility enables researchers and data scientists to train deep learning models faster, making GPU-accelerated machine learning frameworks such as TensorFlow and PyTorch popular choices for AI development.

- Video and Image Processing: CUDA compatibility is widely employed in video transcoding, image recognition, and computer vision applications. The parallel processing capabilities of GPUs facilitate real-time video processing, object detection, and advanced image filtering, providing a substantial boost to multimedia applications.

- Financial Modeling and Quantitative Analysis: Financial institutions utilize CUDA compatibility to accelerate complex risk calculations, option pricing, and portfolio analysis. By harnessing GPU parallelism, traders and analysts can perform high-speed simulations and gain insights into vast amounts of financial data.

Issues with CUDA Version

As we all know, TensorFlow and PyTorch are extensively used for developing machine learning and deep learning models. Each release of these frameworks is compatible with particular CUDA versions (e.g. 10.x, 11.x, or 12.x). It is therefore necessary to install an Nvidia driver for your GPU that supports the required CUDA version. A mismatch can cause device failures and runtime errors.

If you want to learn more about the CUDA Toolkit and compatibility, please visit the Nvidia documentation.
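To make the version-matching idea concrete, here is a minimal Python sketch. It assumes a simplified rule: the highest CUDA version the driver supports should be at least the CUDA version the framework was built against. The helper names `parse_cuda_version` and `driver_supports` are hypothetical, and the version strings are illustrative only. Nvidia's actual compatibility rules are more nuanced, so treat this as a rough first check rather than a definitive test.

```python
def parse_cuda_version(text):
    """Turn a version string such as '11.8' into a tuple of ints, e.g. (11, 8)."""
    parts = text.strip().split(".")
    major = int(parts[0])
    # Treat a bare major version such as '12' as '12.0'.
    minor = int(parts[1]) if len(parts) > 1 else 0
    return major, minor


def driver_supports(driver_cuda, required_cuda):
    """Simplified assumption: the driver's highest supported CUDA version
    must be greater than or equal to the version the framework needs."""
    return parse_cuda_version(driver_cuda) >= parse_cuda_version(required_cuda)


if __name__ == "__main__":
    # Illustrative version strings, not taken from any real compatibility table.
    print(driver_supports("12.2", "11.8"))
    print(driver_supports("11.4", "12.1"))
```

Comparing tuples of integers instead of raw strings avoids ordering mistakes such as "9.2" sorting after "10.1".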

Installation in Ubuntu

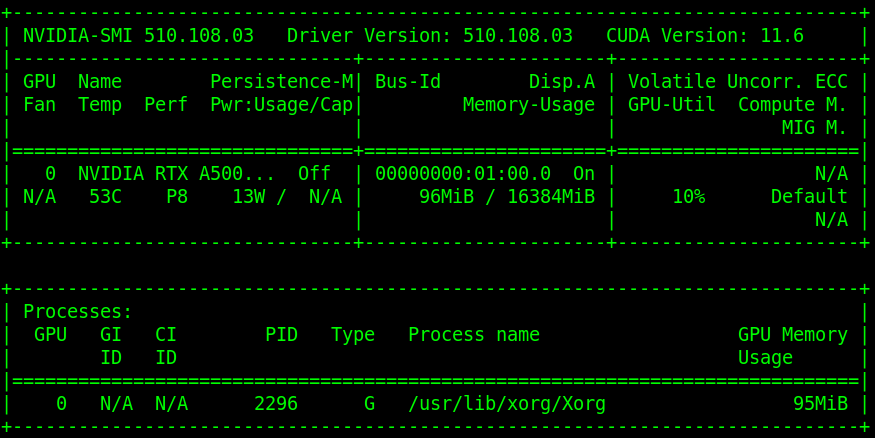

First, we will check whether an Nvidia driver is already installed on our PC by running the nvidia-smi command:

nvidia-smi
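The same check can be scripted. The sketch below is one possible way to do it from Python, assuming `nvidia-smi` is on the `PATH` whenever the driver is installed. The function name `query_nvidia_driver` is hypothetical. It returns `None` when the tool is missing or fails, which usually means the driver still needs to be installed.

```python
import shutil
import subprocess


def query_nvidia_driver():
    """Return the GPU name and driver version reported by nvidia-smi,
    or None if nvidia-smi is not available or exits with an error."""
    if shutil.which("nvidia-smi") is None:
        return None  # the tool is not on PATH, so the driver is probably missing
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return None  # nvidia-smi exists but could not talk to the driver
    return result.stdout.strip()


if __name__ == "__main__":
    info = query_nvidia_driver()
    print(info if info is not None else "No working Nvidia driver found")
```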

Conclusion

Completing soon…

Thank you for reading my blog post! I hope you found it insightful and enjoyable. If you have any questions or feedback, please don't hesitate to reach out. I look forward to hearing from you!

[Chiku Parida]